How Effective Are Your Alerting Rules?

Recently, I came across this Reddit post highlighting the challenges of having ineffective alerting rules:

Here at OnPage, we have worked with many companies that have dealt with exactly that, so I want to share some of our top tips for creating effective alerting rules. Read on to discover…

- Why Too Many Alerts Cause Problems for Incident Teams

- Are Alerts Really Necessary for All Incidents?

- OnPage’s Best Practices for Generating Effective Alerting Rules

Why Too Many Alerts Cause Problems for Incident Teams

Have you ever heard of ‘The Boy Who Cried Wolf’? It all boils down to that. For those unfamiliar, the story goes like this: a boy cries on and on about wolves that aren’t really there, yelling for help just to get a reaction. When his family hears him, they come to help, but there is nothing they can do because there is no wolf. Eventually they grow exhausted and stop believing him when he cries “WOLF!” Then one day he really does see a wolf and calls out to his family. They don’t come, and the boy gets eaten.

This is exactly what happens when incident teams experience alert fatigue. Alerts (the boy) go off far too often for issues that are neither urgent nor actionable. Incident responders (the family) eventually become desensitized to alerts and stop responding altogether. Then, when a real incident happens (the wolf), the team may miss or ignore it, with serious consequences for the organization. That is why teams must evaluate their alerting rules, so they don’t get eaten 😉

Are Alerts Really Necessary for All Incidents?

To lessen the alert burden, teams must evaluate their alerting rules, because in many cases alert thresholds are set too low. Organizations often assume that they should set up as many alerts as possible. However, that could not be further from the truth: as mentioned above, an overabundance of alerts commonly leads to alert fatigue. So, to start your journey to successful alerting rules, here are some of the “good alerts” shared across the Reddit thread:

Service Availability – Impacts on service operations including outages, performance degradation, and high latency.

Error Rates – Elevated rates of errors or failed requests.

Security Incidents – Potential breaches, detected vulnerabilities, suspicious activity.

Deployment Problems – Deployment failures.

SLA Breach Notifications – Unresolved issues exceeding the time limit determined by SLAs.

What constitutes a critical threshold for one company may not hold the same weight for another. It’s crucial for teams to collaborate and define these thresholds based on their unique environment and priorities. As systems evolve and demands change, these thresholds should also be regularly reassessed to ensure alerts remain meaningful, avoiding unnecessary distractions that contribute to alert fatigue.

Simply put, by creating alerts for only the incidents that need immediate attention, organizations can reduce alert noise and ensure the productivity and satisfaction of their team.

OnPage’s Best Practices for Generating Effective Alerting Rules

Prioritize Alerts – Not all alerts are created equal, so teams must prioritize alerts based on severity to ensure that urgent alerts are handled immediately. This helps on-call teams allocate their time and resources to the highest-priority incidents.
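To make this concrete, here is a minimal Python sketch of severity-based ordering. The severity levels, the `AlertQueue` class, and the sample alerts are all hypothetical and chosen for illustration; they are not part of OnPage or any specific monitoring tool.

```python
import heapq
from dataclasses import dataclass, field
from enum import IntEnum


class Severity(IntEnum):
    # Hypothetical levels; a lower value means more urgent.
    CRITICAL = 0
    HIGH = 1
    LOW = 2


@dataclass(order=True)
class Alert:
    severity: Severity
    sequence: int                        # tie-breaker: arrival order
    summary: str = field(compare=False)  # not used when ordering alerts


class AlertQueue:
    """Hands out the most severe alert first, oldest first within a level."""

    def __init__(self):
        self._heap = []
        self._counter = 0

    def push(self, severity, summary):
        heapq.heappush(self._heap, Alert(severity, self._counter, summary))
        self._counter += 1

    def pop(self):
        return heapq.heappop(self._heap)


queue = AlertQueue()
queue.push(Severity.LOW, "Disk usage at 70% on build server")
queue.push(Severity.CRITICAL, "Checkout API returning 5xx errors")
queue.push(Severity.HIGH, "Replication lag above threshold")

# Drain the queue in the order an on-call responder would see the alerts.
order = [queue.pop().summary for _ in range(3)]
```

In this sketch the critical checkout alert comes out first even though it arrived second, and the low-priority disk alert waits until last.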

Avoid Redundant Alerts – Sometimes the same issue triggers multiple alerts, creating unnecessary alert noise. So, teams should invest in monitoring tools with correlation analysis capabilities to ensure that only one alert is sent for a single root cause even if several are generated initially.

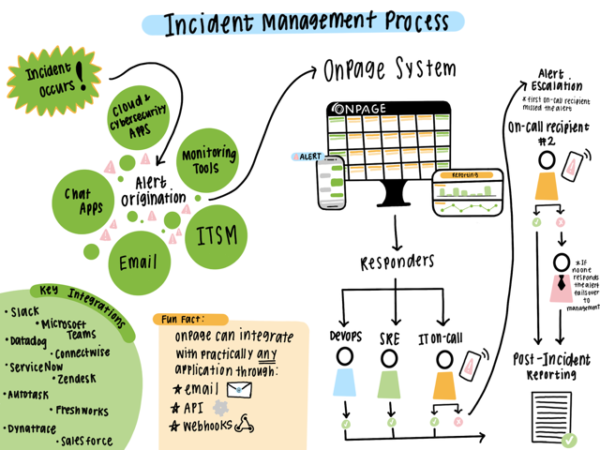
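As a rough illustration of cutting redundant alerts, the sketch below collapses repeated alerts that share a root cause within a time window. It assumes each alert has already been tagged with a `root_cause` key, which is the hard part that real correlation analysis handles; the field names and the five-minute window are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class RawAlert:
    source: str        # the host or service that raised the alert
    root_cause: str    # hypothetical correlation key, assumed to be set upstream
    timestamp: float   # seconds since some reference point


def correlate(alerts, window_seconds=300):
    """Return one alert per root cause per time window, dropping repeats."""
    last_sent = {}     # root_cause -> timestamp of the last notification
    to_notify = []
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        previous = last_sent.get(alert.root_cause)
        if previous is None or alert.timestamp - previous >= window_seconds:
            to_notify.append(alert)
            last_sent[alert.root_cause] = alert.timestamp
    return to_notify


raw = [
    RawAlert("web-1", "db-primary-down", 0.0),
    RawAlert("web-2", "db-primary-down", 10.0),
    RawAlert("lb-1", "cert-expiring", 15.0),
    RawAlert("api-1", "db-primary-down", 20.0),
]
notifications = correlate(raw)
```

Four raw alerts become two notifications: one for the database outage and one for the expiring certificate.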

Implement Escalation Policies – Teams should implement alerting solutions, like OnPage, that always alert the right on-call team member in the case of incidents. Plus, these solutions allow teams to automatically escalate alerts when the primary responder misses the notification – so that no incidents go unnoticed.
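The escalation idea can be sketched in a few lines of Python. This is not OnPage's API; the `EscalationStep` type, the responder names, and the wait times are hypothetical, and the function only decides who should be paged after an alert has gone unacknowledged for a given number of minutes.

```python
from dataclasses import dataclass


@dataclass
class EscalationStep:
    responder: str
    wait_minutes: int   # how long to wait for an acknowledgement at this step


def responder_to_page(steps, minutes_unacknowledged):
    """Return who should be paged, given how long the alert has gone unacknowledged."""
    elapsed = 0
    for step in steps:
        elapsed += step.wait_minutes
        if minutes_unacknowledged < elapsed:
            return step.responder
    # The chain is exhausted: keep paging the final step.
    return steps[-1].responder


policy = [
    EscalationStep("primary on-call", 5),
    EscalationStep("secondary on-call", 10),
    EscalationStep("engineering manager", 15),
]

first = responder_to_page(policy, 0)
after_five = responder_to_page(policy, 5)
after_twenty = responder_to_page(policy, 20)
```

With this policy, the primary responder has five minutes to acknowledge before the secondary is paged, and the manager is paged once fifteen minutes have passed in total.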

Regularly Review Alerting Rules – Over time, teams may find that their alert thresholds are still set too low. By periodically reviewing the effectiveness of their alerts, teams can continue to eliminate excessive alerts.
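One simple way to run such a review is to look at how often each rule led to real action. The sketch below assumes you keep a history of `(rule_name, was_actionable)` pairs, for example from post-incident notes; the rule names and the 50% cutoff are hypothetical.

```python
from collections import defaultdict


def noisy_rules(history, min_actionable_ratio=0.5):
    """Return rules whose share of actionable alerts falls below the cutoff."""
    fired = defaultdict(int)
    actionable = defaultdict(int)
    for rule, was_actionable in history:
        fired[rule] += 1
        if was_actionable:
            actionable[rule] += 1
    return sorted(
        rule for rule in fired
        if actionable[rule] / fired[rule] < min_actionable_ratio
    )


history = [
    ("cpu_above_60_percent", False),
    ("cpu_above_60_percent", False),
    ("cpu_above_60_percent", True),
    ("cpu_above_60_percent", False),
    ("checkout_5xx_rate", True),
    ("checkout_5xx_rate", True),
]
candidates = noisy_rules(history)
```

Here the CPU rule was actionable only one time in four, so it is flagged as a candidate for a higher threshold or for removal.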

Use Alert Enrichment – By providing detailed context within alerts, teams can quickly assess incidents without spending extra time gathering information. With alert enrichment, teams gain immediate visibility into incident details, which improves incident response times.
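As a small sketch of what enrichment can look like, the function below attaches context from a service catalog to an alert before it is sent. The catalog structure, the field names, and the runbook URL are all hypothetical; in practice this context might come from a CMDB or a deployment system.

```python
def enrich(alert, service_catalog):
    """Return a copy of the alert with owner, runbook, and last deploy attached."""
    context = service_catalog.get(alert["service"], {})
    enriched = dict(alert)  # leave the original alert untouched
    enriched["owner"] = context.get("owner", "unknown")
    enriched["runbook"] = context.get("runbook", "none on file")
    enriched["last_deploy"] = context.get("last_deploy", "unknown")
    return enriched


# Hypothetical catalog entry used only for this example.
catalog = {
    "checkout": {
        "owner": "payments team",
        "runbook": "https://example.com/runbooks/checkout",
        "last_deploy": "2 hours ago",
    }
}

alert = {"service": "checkout", "summary": "5xx error rate above threshold"}
enriched_alert = enrich(alert, catalog)
```

The responder now sees who owns the service, where the runbook lives, and when the last deployment happened, all without leaving the alert.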

OnPage as a Solution

OnPage’s incident alert management solution can solve the problem of excessive alerting through:

Distinguishable High and Low Priority Alerts – With OnPage, teams can deliver loud, high-priority alerts that even bypass the silent switch and are easily distinguishable from low-priority alerts or any other push notification the on-call responder may receive.

Role-Based Alerting – OnPage allows teams to route incident alerts to the right engineer based on on-call schedules, ensuring that the right people get the alert every single time and are mobilized efficiently.

Robust Integrations with Monitoring and Ticketing Solutions – Integrate your existing monitoring tools with OnPage so that an incident alert is triggered right when your monitoring tool detects an issue. Teams can set up alerting rules that ensure only meaningful alerts are delivered to the on-call team.